DLC86

Roadie


Regarding this, I just discovered that a Russian guy on the NAM Facebook group has done quite a bit of experimentation with the architecture. He basically found a configuration that makes standard models almost as accurate as hyper accuracy models, at the cost of only a modest CPU increase over standard models. Here's one of his posts about this: https://www.facebook.com/share/p/1BT4B7zSKq/

First of all, thank you guys at TZ3000 for this awesome project! The incredibly fast parallel training of multiple output clips is so much better than local training, and I'm saying that as someone with an RTX 4090 GPU.

Here's my wish list:

- Bulk Downloading: Please add a download button to the "Your Tones" page so that we can select all the trained models we want and download them at once. Maybe I'm missing something here, but so far I have to click on every model separately before I can download it.

- Bulk Uploading: It should work the same way as selecting multiple output clips in the local trainer, where you define a core set of descriptions and then start the training for all of them.

- Multi Architecture Training: I usually train standard and complex models for each output clip, and I'd love to have that ability on TZ3000 as well.

- Basic Parametric Models: Since most guitar pedals and power amps have three or fewer knobs, it would be nice to train them as parametric models instead of as sets of 50+ captures.

- Hyper Accuracy Mode: A couple of guys have already mentioned, both here and in private messages, that they'd like to try the hyper accuracy mode for their own captures. I've modified the core.py file to include a new architecture called "complex". Here it is: https://paste.ofcode.org/36GiiSFkJrSTkySVjCYnEM9

Since I only created this code file today, I'm not sure whether it uses the correct lr and lr_decay values for complex training (I used to change them in the def train(...) section). I'd be grateful if an experienced Python programmer could chime in and confirm whether the following lines work as intended in the code I posted above:

```python
# Inside def train(...): use a lower learning rate and decay for the complex architecture
if architecture == Architecture.COMPLEX:
    lr = 0.001
    lr_decay = 0.001
```

These lines are integrated in the def train() section (rows 1418 to 1420). The intention behind this if statement is that lr should be 0.004 and lr_decay should be 0.007 (the standard values) for every architecture except the complex one, in which case both variables need to be set to 0.001. If the GitHub repository was installed with the pip install command via the Anaconda Prompt, this core.py file can be added to the following directory:

C:\Users\ ... \anaconda3\Lib\site-packages\nam\train

The same goes for other local installation methods: open the nam\train folder, copy the code from the website above, paste it into a new text file, save it as core.py, and copy that core.py file into the nam\train directory.
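One thing worth checking about the `if architecture == Architecture.COMPLEX:` override discussed above: if those lines run inside train() after the arguments have been received, they will replace any lr or lr_decay the caller passed for a complex model, so a user-chosen value would be silently ignored. A minimal sketch of an alternative, assuming `None` is used as a "not set" default; the `Architecture` enum below is a hypothetical stand-in for NAM's, and `resolve_lr` is a hypothetical helper, not part of NAM:

```python
from enum import Enum
from typing import Optional, Tuple


class Architecture(Enum):
    # Hypothetical stand-in for nam's Architecture enum; COMPLEX is the
    # custom entry from the modified core.py, not an upstream NAM value.
    STANDARD = "standard"
    COMPLEX = "complex"


# Values quoted in the post.
DEFAULT_LR, DEFAULT_LR_DECAY = 0.004, 0.007
COMPLEX_LR, COMPLEX_LR_DECAY = 0.001, 0.001


def resolve_lr(
    architecture: Architecture,
    lr: Optional[float] = None,
    lr_decay: Optional[float] = None,
) -> Tuple[float, float]:
    """Pick per-architecture defaults, but keep any value the caller passed."""
    if architecture == Architecture.COMPLEX:
        default_lr, default_decay = COMPLEX_LR, COMPLEX_LR_DECAY
    else:
        default_lr, default_decay = DEFAULT_LR, DEFAULT_LR_DECAY
    return (
        default_lr if lr is None else lr,
        default_decay if lr_decay is None else lr_decay,
    )


print(resolve_lr(Architecture.STANDARD))           # (0.004, 0.007)
print(resolve_lr(Architecture.COMPLEX))            # (0.001, 0.001)
print(resolve_lr(Architecture.COMPLEX, lr=0.002))  # (0.002, 0.001)
```

The plain if statement is simpler and does what the post intends when nobody overrides the values; the sentinel version only matters if users are expected to tune lr and lr_decay for complex models themselves.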

You guys at TZ3000 only need to add the section where the complex architecture is defined, since the lr and lr_decay variables are already exposed to the user:

```python
# Config entry for the custom "complex" (hyper accuracy) architecture
Architecture.COMPLEX: {
    "layers_configs": [
        {
            "input_size": 1,
            "condition_size": 1,
            "channels": 32,
            "head_size": 8,
            "kernel_size": 3,
            "dilations": [1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1, 2, 4, 8, 16, 32, 64, 128, 256, 512],
            "activation": "Tanh",
            "gated": False,
            "head_bias": False,
        },
        {
            "condition_size": 1,
            "input_size": 32,
            "channels": 8,
            "head_size": 1,
            "kernel_size": 3,
            "dilations": [1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1, 2, 4, 8, 16, 32, 64, 128, 256, 512],
            "activation": "Tanh",
            "gated": False,
            "head_bias": True,
        },
    ],
    "head_scale": 0.02,
},
```
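Each layer array in this config runs through the 1 to 512 dilation pattern twice. One way to see what that buys is the receptive field, i.e. how many past samples a single output sample can depend on. A minimal sketch, assuming the usual formula for stacked causal dilated convolutions, 1 + Σ(kernel_size − 1)·dilation (NAM's own WaveNet code may count it slightly differently), and an assumed 48 kHz sample rate; `receptive_field` and `COMPLEX_LAYERS` are hypothetical names:

```python
from typing import Dict, List

# The two layer arrays of the "complex" config, reduced to the only
# fields that affect the receptive field.
DILATIONS = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512] * 2
COMPLEX_LAYERS: List[Dict] = [
    {"kernel_size": 3, "dilations": DILATIONS},
    {"kernel_size": 3, "dilations": DILATIONS},
]


def receptive_field(layer_arrays: List[Dict]) -> int:
    """Receptive field in samples: 1 + sum((k - 1) * d) over every layer."""
    return 1 + sum(
        (layer["kernel_size"] - 1) * d
        for layer in layer_arrays
        for d in layer["dilations"]
    )


rf = receptive_field(COMPLEX_LAYERS)
print(rf)                                      # 8185
print(f"{1000 * rf / 48_000:.1f} ms at 48 kHz")  # 170.5 ms at 48 kHz
```

Under the same formula, a single pass of 1 to 512 per array would give 4093 samples, so the repetition roughly doubles the time window. Note that "channels": 32 does not enter this calculation; it increases the model's capacity and CPU cost, not how far back it can see.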

So far, I've settled on 1200 epochs for hyper accuracy training, mainly because I prefer models with longer training times, but this is based on an experiment with only two output clips and various epoch counts (800, 1000, 1200, 1400, 1600). It's not very scientific, I know, so 1000 epochs might be good enough.

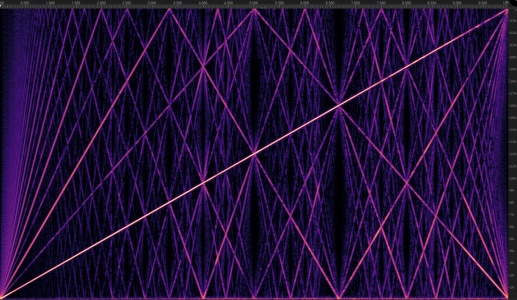

He also made a test tone file that includes some high-frequency sine sweeps, which seems to improve aliasing performance quite a bit: https://www.facebook.com/share/p/18EKHpbsQq/
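His post doesn't spell out exactly what the sweeps look like, so as a hedged illustration only, here is one common way to generate a high-frequency exponential (logarithmic) sine sweep with just the Python standard library. The frequency range, level, 16-bit format, and the helper names `exp_sine_sweep` and `write_wav_16bit` are hypothetical choices, not taken from his file:

```python
import math
import struct
import wave


def exp_sine_sweep(f1: float, f2: float, duration_s: float,
                   sample_rate: int = 48_000, amplitude: float = 0.5) -> list:
    """Exponential sine sweep whose instantaneous frequency goes from f1 to f2 Hz."""
    if not 0 < f1 < f2 < sample_rate / 2:
        raise ValueError("need 0 < f1 < f2 < Nyquist")
    n = round(duration_s * sample_rate)
    k = math.log(f2 / f1)
    samples = []
    for i in range(n):
        t = i / sample_rate
        # Phase of x(t) = sin(2*pi*f1*T/k * (exp(t*k/T) - 1)); its derivative
        # gives an instantaneous frequency of f1 * exp(t*k/T).
        phase = 2 * math.pi * f1 * duration_s / k * (math.exp(t * k / duration_s) - 1)
        samples.append(amplitude * math.sin(phase))
    return samples


def write_wav_16bit(path: str, samples: list, sample_rate: int = 48_000) -> None:
    """Write mono 16-bit PCM, clipping samples to [-1, 1]."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(sample_rate)
        w.writeframes(b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
        ))
```

For example, `write_wav_16bit("hf_sweep.wav", exp_sine_sweep(5_000, 22_000, 2.0))` would write a 2-second mono sweep. How such a sweep would be added to a NAM input file without breaking what the trainer expects from that file is not covered here.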

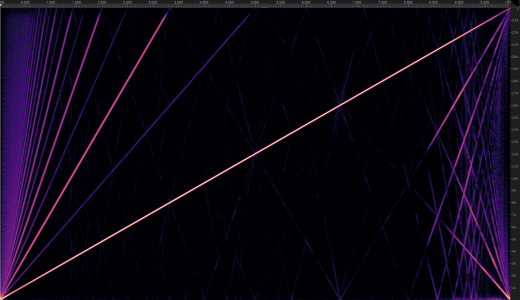

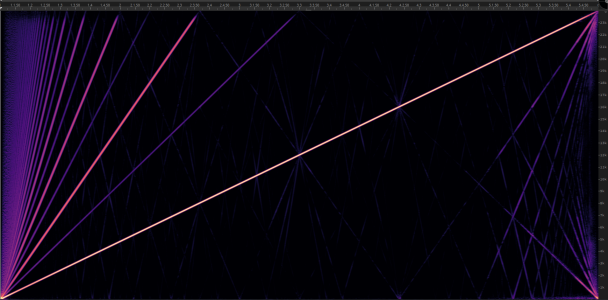

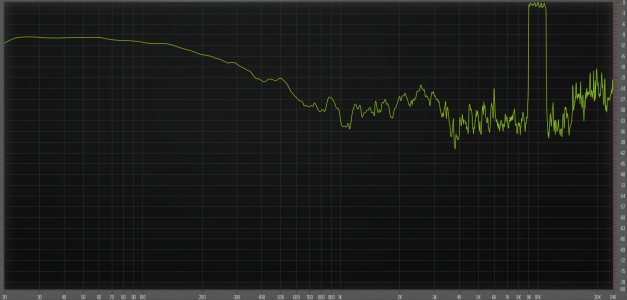

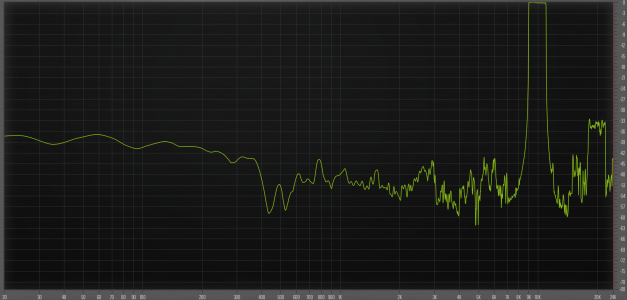

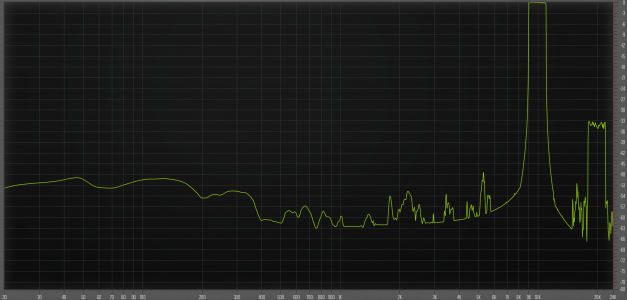

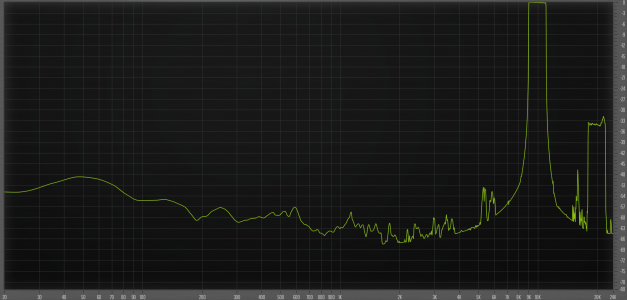

I just tested the models he shared and here's the impressive result:

"classic input"

"super input" + x standard architecture