Slammin Mofo

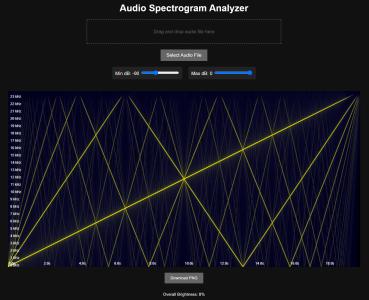

> Ah that sucks... I'm also getting an error message inside the terminal window that the input file does not match any known standard input files, but the GUI gives me a slightly more detailed message:

Honestly, I don't know.

I've also tried a bit to make it work locally, but I haven't found where to tell the trainer to disable the checks on the input file. The message you get is indeed a bit strange, though; in my case it just told me it was the wrong input file.

The full version of the trainer should allow any type of input, but the instructions aren't clear on where to put the config files, so I gave up on that too.

I assume it has to do with the hash codes above: the new training file would need new hashes to correctly identify the start and end points.

Anyway, thanks for the low-aliasing training signal! If only the TZ3000 offered hyper-accuracy training capabilities, I wouldn't even need to train the output clips locally.