Hello @2dor, @MirrorProfiles and @volkan,

I created an account just to ask my question because I share @volkan's confusion.

TL;DR question:

- I am quoting @2dor's critical information, which is the basis of this whole calibration stuff: the plugin author (here, for instance, Neural DSP) provides a calibration figure based on THEIR audio interface (12.2 dBu in this case).

Are they also setting their audio interface gain knob to 0?

I went over this in the Mayer plugin thread - but the main issue here is that plugins have no idea what the mapping from real world voltage level to digital level is because audio interfaces all differ in their circuit design, converter choice, etc.

With amp modeling you need some sense of what voltage the digital signal represents, so plugins assume a default mapping. Allegedly, for Neural DSP plugins that mapping is that a 12.2 dBu analog signal corresponds to 0 dBFS in the digital domain. That's an ASSUMPTION by the plugin.

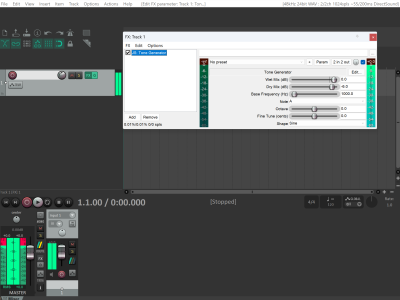
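To put numbers on that assumed mapping, here is a minimal Python sketch. It assumes the 12.2 dBu figure is the RMS level of a sine wave whose peaks just reach 0 dBFS (that reading is my assumption, not something confirmed by the plugin), and the function names are made up for illustration.

```python
import math

# 0 dBu is defined as sqrt(0.6) V RMS, roughly 0.7746 V.
DBU_REF_VOLTS = math.sqrt(0.6)

# Assumed plugin mapping quoted in this thread: 12.2 dBu <-> 0 dBFS.
ASSUMED_DBU_AT_0DBFS = 12.2


def dbu_to_vrms(dbu: float) -> float:
    """Convert a level in dBu to volts RMS."""
    return DBU_REF_VOLTS * 10 ** (dbu / 20)


def dbfs_to_dbu(level_dbfs: float, dbu_at_0dbfs: float) -> float:
    """Analog level that a digital level represents under a given mapping."""
    return level_dbfs + dbu_at_0dbfs


if __name__ == "__main__":
    for level in (0.0, -6.0, -12.0):
        dbu = dbfs_to_dbu(level, ASSUMED_DBU_AT_0DBFS)
        print(f"{level:6.1f} dBFS -> {dbu:5.1f} dBu -> {dbu_to_vrms(dbu):.3f} V RMS")
```

The point is only that the same digital level stands for a different voltage as soon as the `dbu_at_0dbfs` value changes, which is why the plugin has to pick one.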

With the NAM plugin, you can change this mapping directly in the calibration settings.

Other plugins assume other mappings.

Now, for you as a user, what's the easiest way to know what the mapping is for your particular interface? Well, most audio interfaces specify in their documentation their maximum analog input level in dBu at the lowest gain setting. In other words, they are telling you that if you input a signal at <whatever their max dBu spec is>, that results in 0 dBFS in the digital domain.

This is where the "lowest gain" thing came from: at that setting it's easy for you to know the mapping without measuring anything.

It's not that NDSP sets "their" interface to the lowest gain.
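As a rough sketch of how the spec-sheet shortcut gets used: if your interface's mapping differs from the one the plugin assumes, the difference in dB is the input trim you would apply inside the plugin. The 14.0 dBu interface figure below is a hypothetical spec value, and `input_trim_db` is just an illustrative name.

```python
def input_trim_db(interface_dbu_at_0dbfs: float, plugin_dbu_at_0dbfs: float) -> float:
    """Gain (in dB) to add at the plugin input so its assumed mapping
    matches the real mapping of the interface.

    A positive result means the interface has more headroom than the plugin
    assumes, so the signal arrives digitally "too quiet" and needs a boost.
    """
    return interface_dbu_at_0dbfs - plugin_dbu_at_0dbfs


if __name__ == "__main__":
    interface_max_dbu = 14.0   # hypothetical spec: max input at lowest gain
    plugin_assumed_dbu = 12.2  # mapping the plugin allegedly assumes
    trim = input_trim_db(interface_max_dbu, plugin_assumed_dbu)
    print(f"Set the plugin input trim to {trim:+.1f} dB")
```

With an interface that happens to match the plugin's assumption, the trim comes out as 0 dB and nothing needs adjusting.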

Elaborated question:

This whole mess of "set your audio interface input gain to zero" started from the community, not from plugin devs (to my knowledge), who (like Scuffham Amps and many others) instead recommend setting the audio interface gain high enough to maximise SNR while avoiding clipping.

Yes, as said above, mainly to avoid having to measure anything.

If this had started from plugin devs to ensure the most homogeneous user experience, they would have clearly stated: "We, the authors of this plugin, set our audio interface gain to zero to have a reference based on the headroom at zero gain of the audio interface used during development, and we recommend that you (users) do the same on your audio interface so that we are on the same page."

Even if the devs said that, it would still not be homogeneous unless you used the exact same interface. Audio interfaces all have different specs.

What would help, to start, is for plugin devs to do the same thing as the NAM plugin and just allow you to easily enter your own custom mapping of dBu => 0 dBFS.

For this to become transparent to users, there would have to be an audio API to query that signal level mapping from the interface itself (and have that mapping updated as you change the input gain as well).

Because of the lack of such a statement from plugin devs, I speculate about 2 options:

Option 1 - They also set their audio interface gain to maximise SNR and avoid clipping, but provide the audio interface headroom at gain zero just as a (worthless) reference. Thus the reference point that we consider golden is actually wrong, and we are all misled by the plugin calibration data.

As said above, they need to assume SOME mapping. For example, if you are doing component modeling, you would like to know what voltage level your model is getting; a digital signal level does not provide that information, so they assume a mapping. Potentially, they may have arrived at that default mapping by averaging the input specs of the currently popular audio interfaces.

Option 2 - Plugin devs in general have special/high-end audio interfaces that allow them to still maximise the SNR by turning the gain knob, and that provide a readout of the headroom (i.e. maximum input level) left at that gain setting (essentially an automated version of what @MirrorProfiles is suggesting with the sine-wave method). In that case, this calibration method with the gain set to 0 is still valid for us end users.

Calibration is calibration. Setting the gain to 0 is a shortcut for users to avoid having to measure anything: you just read your interface's spec sheet to obtain the correct mapping. If your input gain knob is digitally controlled and the interface reports how many dB it is adding, then you can also just adjust your calibration by that amount without having to measure.

But the most concrete way to get this mapping for your particular interface, at whatever input gain you are using, is, well, to just measure it.
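Both routes (adjusting by the reported gain, or measuring) boil down to simple dB arithmetic. The sketch below assumes you feed the input a sine wave whose RMS voltage you know (e.g. checked with a multimeter) and read its peak level in dBFS in your DAW. All numbers and function names are hypothetical, for illustration only.

```python
import math

# 0 dBu is defined as sqrt(0.6) V RMS, roughly 0.7746 V.
DBU_REF_VOLTS = math.sqrt(0.6)


def vrms_to_dbu(vrms: float) -> float:
    """Convert volts RMS to dBu."""
    if vrms <= 0:
        raise ValueError("voltage must be positive")
    return 20 * math.log10(vrms / DBU_REF_VOLTS)


def measured_dbu_at_0dbfs(input_vrms: float, peak_reading_dbfs: float) -> float:
    """Mapping from a measurement: a sine of known RMS voltage goes in,
    and the DAW shows its peak at peak_reading_dbfs."""
    return vrms_to_dbu(input_vrms) - peak_reading_dbfs


def dbu_at_0dbfs_with_gain(dbu_at_min_gain: float, added_gain_db: float) -> float:
    """Mapping after the interface adds a known amount of input gain.
    Every dB of gain lowers the analog level that hits 0 dBFS by 1 dB."""
    return dbu_at_min_gain - added_gain_db


if __name__ == "__main__":
    # Hypothetical measurement: a 1.0 V RMS sine reads -10.0 dBFS peak.
    print(f"measured: {measured_dbu_at_0dbfs(1.0, -10.0):.2f} dBu at 0 dBFS")
    # Hypothetical spec: 14.0 dBu at lowest gain, interface reports +10 dB.
    print(f"with gain: {dbu_at_0dbfs_with_gain(14.0, 10.0):.1f} dBu at 0 dBFS")
```

Whichever way you get the number, that is the value you would enter as the calibration in a plugin that lets you set it.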

Hope I expressed my question well (English is not my main language, not even my second).

kindly,

LiCoRn

Hope the explanations above help.